How do patients feel about AI in healthcare?

As AI grows in healthcare, 60% of patients worry it erodes trust. Many fear it’s moving too fast and weakening human connection.


Providers should prepare to field patient concerns as healthcare systems increasingly integrate artificial intelligence. Knowing how patients feel about AI in healthcare can help clinicians engage more thoughtfully with this technology in clinical practice.

How is artificial intelligence being used in healthcare?

Patients and providers may not notice artificial intelligence’s presence, but AI plays a more significant role in healthcare technology every month. For example, chatbots and virtual health assistants rely on generative AI technology to answer common questions, and large hospitals use AI-powered software to optimize staffing and resource allocation based on historical data.

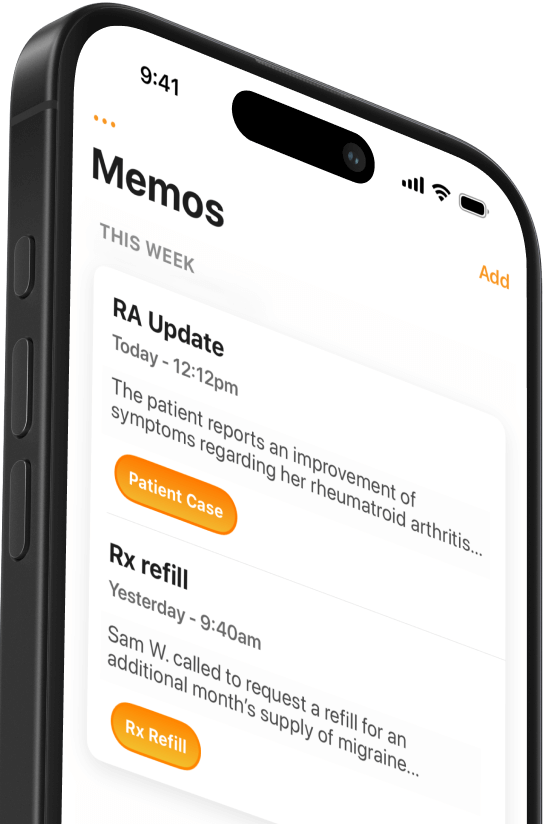

AI also plays a growing role in clinical practice. Generative AI enhances clinical decision support systems by offering insights based on patient data, medical literature, and best practices. Additionally, AI-based scribes use natural language processing to record patient visits and automate clinical documentation.

Some surveys show clinicians already use generative AI tools to assist with 1 in 10 clinical decisions.

How patients feel about AI

Public attitudes toward artificial intelligence are still taking shape as AI technologies become commonplace across industries. Here’s what we currently know about how patients feel about AI in healthcare.

Patients are uncomfortable with providers relying on AI for treatment

Patients have nuanced views about artificial intelligence in healthcare, which vary by demographic group. However, one trend is consistent: most patients are uncomfortable with providers relying on AI for treatment decisions.

A Pew Research Center survey found that 6 in 10 Americans would feel uncomfortable if their healthcare provider relied on AI for their medical care. Other surveys have found that 7 in 10 U.S. adults are concerned about the increasing use of AI in healthcare.

These surveys are consistent with nationally representative data published in JAMA Network Open, which found that 70% of respondents were somewhat or very uncomfortable with receiving a diagnosis from an AI algorithm that was 90% accurate.

“Six-in-ten U.S. adults say they would feel uncomfortable if their own health care provider relied on artificial intelligence to do things like diagnose disease and recommend treatments.”

Pew Research (2022)

Comfort with AI varies by clinical application

While most Americans are uncomfortable receiving AI-informed treatment, feelings vary by clinical application. For example, here is the percentage of respondents who say they are comfortable with AI doing some of the things their doctor usually does:

- Reading your chest x-ray (54%)

- Recommending the types of antibiotics you get (52.5%)

- Making the diagnosis of pneumonia (48%)

- Telling you that you have pneumonia (37%)

- Making the diagnosis of cancer (31%)

- Telling you that you have cancer (18%)

Notice that patients are generally more comfortable with AI playing a diagnostic role than with the same technology communicating results.

Views are mixed regarding whether AI leads to better health outcomes

While most patients remain uncomfortable with AI playing a role in their treatment, about half believe the technology improves health outcomes. For example, in one study, 55% of patients said they think AI will make healthcare better or much better, while just 6% believe it will make healthcare worse.

Many patients believe AI can reduce mistakes made by healthcare providers

Patients are uncomfortable with providers using AI for treatment, but most think it will improve healthcare. While this may initially seem contradictory, one explanation could be that patients see a role for AI in reducing error.

A larger share of Americans think AI use in health and medicine would reduce (40%) rather than increase (27%) mistakes made by providers.

Americans see potential for AI to reduce problems with racial and ethnic bias

Among patients who see a problem with racial and ethnic bias in health care, over half believe AI would improve the issue of bias and unfair treatment.

Other surveys find that non-white respondents are more likely to be concerned about AI’s unintended consequences.

There’s broad concern about AI impacting the personal connection between patient and provider

In addition to Americans’ discomfort with receiving AI-based treatment, another trend is consistent: patients worry that AI will harm patient-provider interactions. Patients want to connect with their doctors and see technology as a barrier.

“But there is wide concern about AI’s potential impact on the personal connection between a patient and health care provider: 57% say the use of artificial intelligence to do things like diagnose disease and recommend treatments would make the patient-provider relationship worse. Only 13% say it would be better.”

Pew Research (2022)

Americans are worried providers will adopt AI technologies too fast

Perhaps the rapid proliferation of AI bots like ChatGPT is to blame, but Americans are more concerned about healthcare providers adopting AI too fast than too slowly. Three-quarters of patients worry that healthcare providers will adopt this technology too quickly, before fully understanding the risks to patients.

Patients’ top concerns relate to AI’s unintended consequences, including misdiagnosis, privacy breaches, less time with clinicians, and higher healthcare costs.

Recommendations for providers

As AI-based technology plays a more prominent role in healthcare administration and clinical practice, providers will benefit from educating themselves and patients about the benefits and limitations of this technology. Start with your own education by understanding the recent emergence of generative AI and its role in healthcare.

When incorporating AI technology in clinical practice, remember that patients remain skeptical. Most Americans are uncomfortable with AI-based treatment and worry it will disrupt the human connection with their healthcare provider.

Providers should speak openly with patients about the role of AI in treatment decisions while continuing to practice excellent patient communication. Overall, patients see AI's potential to reduce errors and improve healthcare. However, Americans prefer that healthcare providers take a cautious approach rather than rushing to adopt new AI technology.
